I recently had the opportunity, or rather the critical need, to optimize the boot time of some applications on an i.MX6ULL processor. For anyone unfamiliar with it, this is a single-core ARM processor built around a Cortex-A7 core running at up to 900 MHz. It provides many of the interfaces common to microprocessors, including 16-bit DDR, NAND/NOR flash, eMMC, Quad SPI, UART, I2C, SPI, etc.

This particular implementation runs a recent Linux kernel with multiple Docker applications that execute the business logic. The problem was that the time from power-on to the system being ready and available was over 6 minutes! Obviously, this was a huge issue and unacceptable performance from a user perspective, so I was tasked with reducing that boot time. I was not given any target numbers, so I started by simply doing the best I could with the hardware.

TL;DR

By optimizing the kernel configuration and the startup of my applications, I was able to cut the boot time in half. Along the way, I also found some issues in our board's hardware design that, once addressed, should improve read/write speeds to our eMMC.

In total, I was able to shave over three minutes off our boot time. It is still not optimal, but I am waiting on the hardware rework before deciding how much further optimization is required.


| Boot/Initialization Period | Original Time | Optimized Time |
|---|---|---|
| Bootloader and Kernel Load | 30 seconds | 22 seconds |
| systemd Service Initialization | 5 minutes 30 seconds | 2 minutes 30 seconds |

Approach

My approach to optimizing a Linux system always starts with the kernel. This usually saves only a few seconds of boot time, but it is the area I am most familiar with, so that is where I began.

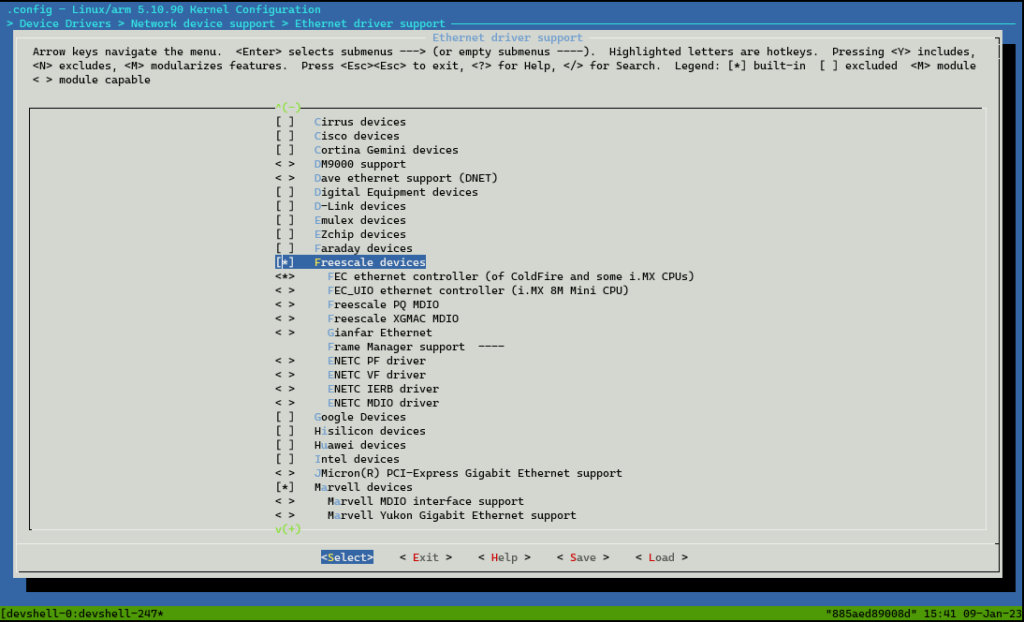

The Linux kernel is designed so that many drivers and features can be loaded and unloaded at runtime as kernel modules. However, depending on your system and requirements, making everything available as modules can significantly slow down your boot. My approach when working on embedded systems with custom hardware is to configure the kernel so that all necessary drivers and all critical features are built into the kernel. Anything that applications may need later at runtime can be left as a module, and features that are definitely not required are removed completely.
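As an illustration of that split, a configuration fragment might look like the sketch below. The file name boot-optimize.cfg and every option in it are hypothetical examples for an eMMC-booting i.MX6ULL board with a CAN interface; substitute the drivers your hardware actually uses.

```shell
#!/bin/sh
# Sketch: write a hypothetical kernel configuration fragment (boot-optimize.cfg).
# The options are examples only; pick the ones that match your board.
cat > boot-optimize.cfg <<'EOF'
# Built in: needed to reach the root filesystem, so nothing to load at boot
CONFIG_MMC=y
CONFIG_MMC_SDHCI=y
CONFIG_MMC_SDHCI_PLTFM=y
CONFIG_MMC_SDHCI_ESDHC_IMX=y
CONFIG_EXT4_FS=y

# Modules: only needed later, once the applications start
CONFIG_CAN_FLEXCAN=m
CONFIG_CAN_VXCAN=m

# Removed: features this (hypothetical) headless board does not use
CONFIG_SOUND=n
CONFIG_INPUT_JOYSTICK=n
EOF
```

Lines starting with "#" that are not of the form "# CONFIG_FOO is not set" are treated as plain comments by Kconfig, so the grouping comments are harmless.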

Please note that there is a fine line to walk when optimizing your kernel. Making everything built in bloats the kernel image, which means it takes longer to read from storage, so leaving some things as modules is fine and keeps the image smaller, which in turn improves load time. But with too many modules, each one has to be loaded before the parts of the system that depend on it can start, so there is a balance to be struck.
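To get a rough sense of where a configuration sits on that spectrum, you can count how many options are built in versus built as modules. The function below is a small sketch that does this for a .config or fragment file; the name config_balance is hypothetical, and the counts are only a proxy, since the size and load cost of individual options vary widely.

```shell
#!/bin/sh
# Sketch: count built-in (=y) and module (=m) options in a kernel .config file.
# config_balance is a hypothetical helper name, not part of any tool.
config_balance() {
    # grep -c prints 0 (and exits 1) when nothing matches, so ignore the exit code.
    builtin=$(grep -c '^CONFIG_[A-Za-z0-9_]*=y$' "$1" || true)
    modules=$(grep -c '^CONFIG_[A-Za-z0-9_]*=m$' "$1" || true)
    echo "built-in: $builtin, modules: $modules"
}
```

Point it at the .config in your kernel build directory before and after a change to see how far the balance has shifted.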

Once the kernel was in good shape, I turned my attention to the runtime services and the applications started at boot. Our system used systemd to manage initialization, so the right tool was clearly going to be systemd-analyze. It is an extremely useful tool when you need to see how services interact with one another during initialization: you can get a list of how long each service took to initialize, view a graph of how they are all related, and even see the critical chain of services through initialization. The typical process for optimizing initialization is two-fold: a) identify services that are running but not required, so you can turn them off, and b) identify services that are taking an abnormal amount of time, so you can optimize those specific services.

Finally, after the kernel was optimized, and I had removed all the unnecessary services, I was able to focus on the few services and applications that were simply taking forever to start up.

Kernel Optimization

To optimize the kernel, I used a few Yocto build system commands to manipulate the kernel configuration. In Yocto, most systems use kernel configuration fragments, which are an easy and clean way to manage features and drivers in a hardware- and kernel-agnostic fashion.

The way this works is that your primary kernel recipe in Yocto manages a default kernel configuration (defconfig) that defines the typical features and drivers for that version of the kernel. This primary layer typically comes from the microprocessor vendor, in this case NXP. Your hardware layer then provides configuration fragments that override those features. Finally, additional meta layers can provide further fragments if necessary. You can read more about kernel configuration in the Yocto Project documentation.
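The layering boils down to "later fragments win." The sketch below models only that merge step: it reads a base configuration and then each fragment in order, keeping the last value seen for every option. It is a simplified illustration with a hypothetical function name, not Yocto's implementation; in Yocto the real merge is done by the kernel's scripts/kconfig/merge_config.sh, after which Kconfig resolves dependencies and may still change some values.

```shell
#!/bin/sh
# Sketch of "last assignment wins" fragment merging (a simplified model,
# not Yocto's actual implementation). merge_fragments is a hypothetical name.
# Usage: merge_fragments base.config frag1.cfg frag2.cfg ...
merge_fragments() {
    awk '
        # "CONFIG_FOO=value": remember the latest value for FOO.
        /^CONFIG_[A-Za-z0-9_]+=/ {
            sym = substr($0, 1, index($0, "=") - 1)
            if (!(sym in val)) order[++n] = sym
            val[sym] = $0
            next
        }
        # "# CONFIG_FOO is not set" is equivalent to CONFIG_FOO=n.
        /^# CONFIG_[A-Za-z0-9_]+ is not set$/ {
            sym = $2
            if (!(sym in val)) order[++n] = sym
            val[sym] = sym "=n"
            next
        }
        # Print each option once, in order of first appearance.
        END { for (i = 1; i <= n; i++) print val[order[i]] }
    ' "$@"
}
```

Because dependency resolution happens after this step, an option can be requested by a fragment and still be missing from the final configuration, which is exactly the situation the QA warnings described below report.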

```shell
bitbake linux-lmp-fslc-imx-rt -c menuconfig
```

When dealing with optimizations, however, you typically end up overriding almost everything in the default configuration. Rather than editing the defconfig file itself or providing an entirely new one, I stuck with the fragment approach.

```shell
# Create a kernel configuration from the default configuration
# (i.e., build the kernel recipe through the configure step).
# Replace linux-yocto with your kernel recipe (e.g., linux-lmp-fslc-imx-rt).
bitbake linux-yocto -c kernel_configme -f

# Make the configuration changes desired for the fragment,
# then exit menuconfig, saving the configuration
bitbake linux-yocto -c menuconfig

# Create the fragment from the differences
bitbake linux-yocto -c diffconfig

# Copy the fragment to your repository and add it to your recipe(s)
```

You can read more about creating kernel configuration fragments in this quick tip.
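For that last step, wiring the fragment into the build usually means a bbappend for the kernel recipe in your own layer. The sketch below assumes a hypothetical layer called meta-mylayer, the recipe name linux-lmp-fslc-imx-rt from the menuconfig example above, and a fragment named boot-optimize.cfg. The diffconfig task writes its output to fragment.cfg in the recipe's work directory, so copy and rename that file into place; the touch below only stands in for it.

```shell
#!/bin/sh
# Sketch: add a kernel configuration fragment to a kernel recipe via a bbappend.
# meta-mylayer, the recipe name, and boot-optimize.cfg are hypothetical examples.
set -e

LAYER=meta-mylayer
RECIPE_DIR="$LAYER/recipes-kernel/linux"

mkdir -p "$RECIPE_DIR/files"
# Placeholder for the fragment copied from the diffconfig output.
touch "$RECIPE_DIR/files/boot-optimize.cfg"

# Let BitBake find files/ next to the bbappend and add the fragment to SRC_URI.
# In recipes that inherit kernel-yocto, .cfg files in SRC_URI are merged as fragments.
cat > "$RECIPE_DIR/linux-lmp-fslc-imx-rt_%.bbappend" <<'EOF'
FILESEXTRAPATHS:prepend := "${THISDIR}/files:"
SRC_URI += "file://boot-optimize.cfg"
EOF
```

The `:prepend` override syntax applies to Honister and later releases; older releases spell it `FILESEXTRAPATHS_prepend`.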

When editing the kernel configuration via menuconfig, I made all my desired changes, generated the configuration fragment, and built the kernel. This resulted in many QA warnings about options that were defined somewhere in the configuration chain but did not end up in the final configuration. Those warnings simply mean that the build system cannot verify what the correct configuration should be. This is usually because your defconfig file sets a CONFIG_* option that is not part of the final configuration because you implicitly removed it (i.e., you disabled a feature that the option depends on). To address this, take the CONFIG_* option mentioned in the QA warning and add it to your optimization fragment with "=n" at the end to explicitly disable it.
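That fix is easy to script once you have the option names. The sketch below takes the symbol names as arguments (copied by hand from the QA output, since this does not try to parse the warning text) and appends an explicit =n line for each one the fragment does not already mention. The helper name disable_symbols is hypothetical.

```shell
#!/bin/sh
# Sketch: append "CONFIG_FOO=n" to a fragment for each symbol named in a QA warning.
# disable_symbols is a hypothetical helper; the fragment path is the first argument.
# Usage: disable_symbols boot-optimize.cfg CONFIG_FOO CONFIG_BAR ...
disable_symbols() {
    fragment=$1
    shift
    touch "$fragment"
    for sym in "$@"; do
        # Leave symbols the fragment already sets (either "=..." or "is not set")
        # untouched, so conflicts can be resolved by hand.
        if grep -q -e "^$sym=" -e "^# $sym is not set\$" "$fragment"; then
            continue
        fi
        echo "$sym=n" >> "$fragment"
    done
}
```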

Initialization Optimization via systemd-analyze

systemd is a popular choice for managing system components in Linux systems. Most modern Linux distributions use it in one way or another, and it provides a wide range of tools to help administrators manage their systems.

In my case, since my system used systemd to manage all the runtime services and initialization, I was able to make use of the systemd-analyze tool. Here is a short snippet from the man page:

systemd-analyze may be used to determine system boot-up performance statistics and retrieve other state and tracing information from the system and service manager, and to verify the correctness of unit files. It is also used to access special functions useful for advanced system manager debugging.

For this exercise, I made use of the commands blame, plot, dot, and critical-chain.

To start my debug process, I wanted to know at a high level what services were taking the longest to initialize. To do this, I made use of the blame and plot commands.

systemd-analyze blame looks at all the units that systemd manages and prints a list sorted by how long each took to initialize. This was exactly the kind of information I was after, and it gave me a starting point in my search for what to optimize. However, you have to be a little careful when reading this data, because services are often interdependent: one service may take a long time to initialize simply because it cannot finish until another service has completed its own initialization.

user@localhost:~$ systemd-analyze blame

22.179s NetworkManager-wait-online.service

21.986s docker-vxcan.service

19.405s docker.service

14.119s dev-disk-by\x2dlabel-otaroot.device

12.161s systemd-resolved.service

10.973s systemd-logind.service

10.673s containerd.service

9.702s systemd-networkd.service

9.443s systemd-networkd-wait-online.service

6.789s ModemManager.service

5.690s fio-docker-fsck.service

5.676s systemd-udev-trigger.service

5.552s systemd-modules-load.service

5.127s btattach.service

5.062s user@1001.service

4.670s sshdgenkeys.service

3.793s NetworkManager.service

3.780s systemd-journald.service

2.945s systemd-timesyncd.service

2.837s bluetooth.service

2.409s systemd-udevd.service

2.084s zram-swap.service

1.677s systemd-userdbd.service

1.621s avahi-daemon.service

1.284s systemd-remount-fs.service

1.080s dev-mqueue.mount

1.025s sys-kernel-debug.mount

1.015s modprobe@fuse.service

1.010s sys-kernel-tracing.mount

1.004s modprobe@configfs.service

1.004s modprobe@drm.service

997ms kmod-static-nodes.service

871ms systemd-rfkill.service

832ms systemd-journal-catalog-update.service

732ms systemd-tmpfiles-setup.service

668ms systemd-sysusers.service

592ms systemd-user-sessions.service

562ms systemd-tmpfiles-setup-dev.service

533ms ip6tables.service

507ms iptables.service

464ms systemd-sysctl.service

390ms systemd-journal-flush.service

364ms systemd-random-seed.service

359ms systemd-update-utmp-runlevel.service

346ms systemd-update-utmp.service

318ms user-runtime-dir@1001.service

232ms tmp.mount

220ms var.mount

219ms sys-fs-fuse-connections.mount

210ms var-rootdirs-mnt-boot.mount

207ms systemd-update-done.service

201ms var-volatile.mount

165ms sys-kernel-config.mount

147ms docker.socket

140ms sshd.socket

134ms ostree-remount.service

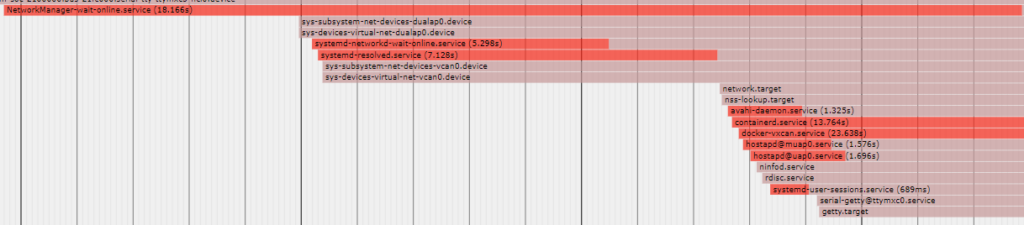

123ms dev-zram0.swapCode language: JavaScript (javascript)Because blame doesn’t show any dependency information, systemd-analyze plot > file.svg was the next tool in my quiver to help. This command will generate the same information as blame but will place it all in a nice plot form, so you can see what services started first, how long each took, and also pick out some dependencies between services.
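When comparing runs before and after a change, it helps to turn blame's time spans into plain seconds. The awk sketch below, wrapped in a hypothetical blame_over function, converts spans such as "1min 3.755s" or "997ms" to seconds and prints the units above a threshold, slowest first. It assumes the one-unit-per-line layout shown above, with the unit name as the last field.

```shell
#!/bin/sh
# Sketch: list units from `systemd-analyze blame` output slower than a threshold.
# blame_over is a hypothetical helper name. Usage:
#   systemd-analyze blame | blame_over 5
blame_over() {
    awk -v limit="$1" '
        # Convert a systemd time span such as "1min 3.755s" or "997ms" to seconds.
        function secs(span,    n, i, parts, v, total) {
            total = 0
            n = split(span, parts, " ")
            for (i = 1; i <= n; i++) {
                v = parts[i]
                if (v ~ /ms$/)               { sub(/ms$/, "", v);          total += v / 1000 }
                else if (v ~ /(us|µs|μs)$/)  { sub(/(us|µs|μs)$/, "", v);  total += v / 1000000 }
                else if (v ~ /min$/)         { sub(/min$/, "", v);         total += v * 60 }
                else if (v ~ /h$/)           { sub(/h$/, "", v);           total += v * 3600 }
                else if (v ~ /s$/)           { sub(/s$/, "", v);           total += v }
            }
            return total
        }
        NF >= 2 {
            unit = $NF                                  # the unit name is the last field
            span = $0
            sub(/[ \t]*[^ \t]+[ \t]*$/, "", span)       # everything before it is the time
            t = secs(span)
            if (t > limit + 0) printf "%.3f %s\n", t, unit
        }
    ' | sort -rn
}
```

Saving this output from two boots and diffing it gives a quick per-unit view of what a change actually bought you.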

My main use of the blame and plot commands was to identify services that were obviously taking a lot of time, but also to identify services that were simply not required. systemctl --type=service also helped with this process by listing all the service units that systemd had loaded.
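One way to make the "not required" review repeatable is to keep an allowlist of the services your product actually needs and compare the enabled units against it. The sketch below reads the output of `systemctl list-unit-files --type=service --state=enabled --no-legend` on stdin and prints every enabled service missing from the allowlist; the function name not_in_allowlist and the allowlist file are hypothetical.

```shell
#!/bin/sh
# Sketch: print enabled services that are not on an allowlist of required units.
# not_in_allowlist and the allowlist file are hypothetical. Usage on the target:
#   systemctl list-unit-files --type=service --state=enabled --no-legend \
#       | not_in_allowlist required-services.txt
not_in_allowlist() {
    # The first column of list-unit-files output is the unit name; keep only
    # names that do not exactly match a line of the allowlist file.
    awk '{ print $1 }' | grep -vxFf "$1" || true
}
```

Anything it prints is a candidate to investigate with dot before disabling.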

Dependencies can still be hard to spot definitively from the plot alone, however. That is where the systemd-analyze dot '<pattern>' command is really handy. When optimizing my boot time, I would use blame and plot to identify potential culprits and then run them through dot to see how they were related. For example, I found that my network configuration was taking an abnormal amount of time, so I looked at systemd-analyze dot '*network*.*' to see how systemd-networkd and the related services interacted with one another. This helped me understand that half of the services being started in support of the network were not actually required (such as NetworkManager and the IPv6 support services). By disabling those, I was able to save over 30 seconds of boot time, simply by removing a couple of services that were not required.
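On the target, trying this out can be as simple as disabling the units in question. The sketch below wraps that in a hypothetical disable_units helper; it also masks each unit, which additionally prevents other units from starting it as a dependency. The SYSTEMCTL override exists only to allow a dry run with echo, and the unit names in the usage comment are examples taken from the blame output above, assuming systemd-networkd stays in charge of the network. For a production image, the same change belongs in the Yocto build rather than on a running device.

```shell
#!/bin/sh
# Sketch: disable and mask systemd units that turned out to be unnecessary.
# disable_units is a hypothetical helper. Usage on the target, for example:
#   disable_units NetworkManager.service NetworkManager-wait-online.service
# Dry run (prints the commands instead of running them):
#   SYSTEMCTL=echo disable_units NetworkManager.service
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

disable_units() {
    for unit in "$@"; do
        # --now also stops the unit if it is currently running.
        $SYSTEMCTL disable --now "$unit"
        # Masking keeps other units from pulling it back in via Wants=/Requires=.
        $SYSTEMCTL mask "$unit"
    done
}
```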

Finally, I used the systemd-analyze critical-chain command to view just the critical chain of units. This command prints the time-critical chain of units that leads to a fully initialized system. Only the units on that chain are shown, rather than every service.

```text
user@localhost:~$ systemd-analyze critical-chain
The time when unit became active or started is printed after the "@" character.
The time the unit took to start is printed after the "+" character.

multi-user.target @1min 21.457s
`-docker.service @1min 3.755s +17.683s
  `-fio-docker-fsck.service @26.784s +36.939s
    `-basic.target @26.517s
      `-sockets.target @26.493s
        `-sshd.socket @26.198s +258ms
          `-sysinit.target @25.799s
            `-systemd-timesyncd.service @23.827s +1.922s
              `-systemd-tmpfiles-setup.service @23.224s +518ms
                `-local-fs.target @22.887s
                  `-var-volatile.mount @22.596s +222ms
                    `-swap.target @22.393s
                      `-dev-zram0.swap @22.208s +113ms
                        `-dev-zram0.device @22.136s
```

This view is useful as a quick and dirty way of getting much of the same information that blame, plot, and dot provide separately. However, because it doesn't show all services, it can only help you optimize the worst offenders.
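Since the "+" values are the time each unit itself spent starting, sorting them is a quick way to rank the offenders on the chain. The sketch below, a hypothetical chain_cost helper, extracts the unit name and the "+" duration from each line and prints them slowest first. It relies only on the "name @activated +duration" layout shown above and finds the unit name by its type suffix, so it does not depend on whether the tree is drawn with ASCII or UTF-8 glyphs.

```shell
#!/bin/sh
# Sketch: rank units in `systemd-analyze critical-chain` output by their "+" time.
# chain_cost is a hypothetical helper name. Usage:
#   systemd-analyze critical-chain | chain_cost
chain_cost() {
    awk '
        # Convert a systemd time span such as "1min 3.755s" or "258ms" to seconds.
        function secs(span,    n, i, parts, v, total) {
            total = 0
            n = split(span, parts, " ")
            for (i = 1; i <= n; i++) {
                v = parts[i]
                if (v ~ /ms$/)               { sub(/ms$/, "", v);          total += v / 1000 }
                else if (v ~ /(us|µs|μs)$/)  { sub(/(us|µs|μs)$/, "", v);  total += v / 1000000 }
                else if (v ~ /min$/)         { sub(/min$/, "", v);         total += v * 60 }
                else if (v ~ /h$/)           { sub(/h$/, "", v);           total += v * 3600 }
                else if (v ~ /s$/)           { sub(/s$/, "", v);           total += v }
            }
            return total
        }
        # Only lines with a "+duration" describe a unit that spent time starting.
        index($0, " +") > 0 {
            # A unit name ends in a known type suffix; this skips the tree glyphs.
            if (!match($0, /[A-Za-z0-9:_.\\@][A-Za-z0-9:_.\\@-]*\.(service|socket|target|mount|swap|device|path|timer|slice|scope|automount)/))
                next
            unit = substr($0, RSTART, RLENGTH)
            printf "%.3f %s\n", secs(substr($0, index($0, " +") + 2)), unit
        }
    ' | sort -rn
}
```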

Application Optimization

Finally, after all the kernel and service optimizations, I was still seeing that a couple of application services were taking the majority of the startup time. Specifically, these were Docker and a Docker plugin for managing CAN networks.

These services were the last in the service chain to start. Once the network was up and configured, they would start, and nothing else was holding them up. That let me focus directly on what was causing those applications to take so long to start and optimize them.

First, Docker Compose was taking well over two minutes to start and load containers. Second, my Docker plugin for CAN networks was taking well over 20 seconds to start up as well.

When I checked my version of Docker Compose, I found that it was still running the Python-based Compose v1 rather than the newer and faster Go-based Compose v2. By upgrading Compose, I was able to reduce the startup time from well over two minutes to about 1 minute 40 seconds.
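If you are not sure which Compose a device is running, the version string tells you: Compose v1 (the Python implementation) reports a 1.x version, while Compose v2 (the Go rewrite) reports 2.x or later. The sketch below classifies a version string accordingly; compose_impl is a hypothetical helper, and the sample strings in the test reflect the typical output formats of each generation.

```shell
#!/bin/sh
# Sketch: tell Python-based Compose v1 apart from Go-based Compose v2+
# by its version string. compose_impl is a hypothetical helper. Usage:
#   compose_impl "$(docker-compose version)"
compose_impl() {
    # Take the major number of the first "X.Y" version found in the string.
    major=$(printf '%s\n' "$1" | grep -oE '[0-9]+\.[0-9]+' | head -n 1 | cut -d. -f1)
    case "$major" in
        1)  echo "python (Compose v1)" ;;
        "") echo "unknown" ;;
        *)  echo "go (Compose v2 or later)" ;;
    esac
}
```

Check whichever command your startup service actually invokes, since a device can have both the standalone docker-compose binary and the docker compose plugin installed.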

I also found that the CAN network plugin was written in Python as well. So, rather than continue using it, I rewrote that plugin in Rust, which also gave me the opportunity to fix a couple of shortcomings I found in the plugin itself. This reduced the initialization time of the plugin from over 30 seconds to under a second — a huge savings!

```text
488ms docker-vxcan.service
```

Conclusion

Overall, this was a great exercise in the steps needed to optimize the boot process of a Linux system. While I could certainly optimize the system some more, I believe the remaining gains will be minimal, at least until I have new hardware with the flash memory interfaces running at full speed. Then I can revisit this and see whether I can make things any faster!

What is your experience with speeding up Linux boot and initialization on embedded systems? Any tips and tricks you would like to share? Comment them below!
